Deadlock in OS

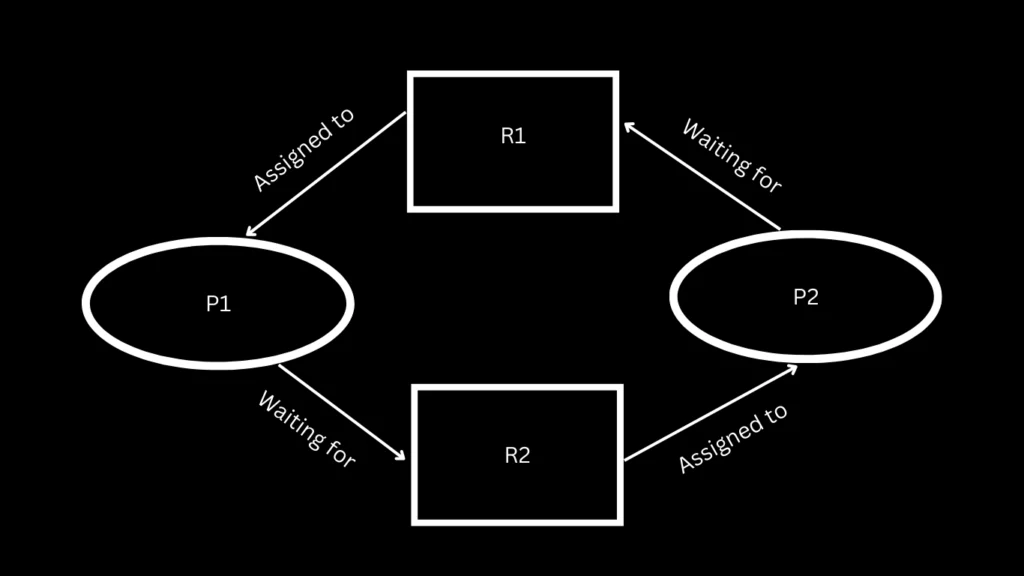

Deadlock in operating system refers to a situation where two or more processes are unable to proceed because each is waiting for the other to release a resource.

Essentially, the processes are stuck in a circular waiting condition, where each process holds a resource and is waiting for another resource acquired by another process. As a result, none of the processes can move forward, leading to a state of deadlock.

For example, in the diagram below, we can consider a scenario involving two processes, P1 and P2, along with two resources, R1 and R2:

- Initial State:

- P1 holds R1 and requests R2.

- P2 holds R2 and requests R1.

- Execution Steps:

- P1 releases R1 and waits for R2 to be released by P2.

- P2 releases R2 and waits for R1 to be released by P1.

- Deadlock:

- Now, neither process can proceed because each is waiting for the resource held by the other.

This article explores the conditions that lead to deadlock in OS, provides example, and discusses strategies for prevention.

Conditions for Deadlock

Four conditions, known as the “Deadlock Detection” model, must be present for a deadlock to occur:

Mutual Exclusion

Mutual exclusion is a concept in operating system and computer science that ensures only one process or thread can access a critical section of code at any given time.

- Critical Section: The critical section is a part of the program where shared resources, such as variables or data structures, are accessed or modified.

- Objective: The primary goal of mutual exclusion is to prevent concurrent access to shared resources, minimizing the risk of data corruption and unexpected behavior.

- Synchronization Mechanism: Mutexes (mutex locks) are commonly used to implement mutual exclusion. A mutex acts as a gatekeeper for a critical section, allowing only one process or thread to enter it at a time.

- Purpose: By enforcing mutual exclusion, mutexes help prevent race conditions and maintain the integrity of shared resources in a concurrent computing environment.

- Mutex Operations:

lock(mutex): Ensures exclusive access to the critical section, preventing other processes or threads from entering.unlock(mutex): Releases the mutex, allowing other processes or threads to acquire it and enter the critical section when needed.- Example Pseudocode:

// Shared resource shared_variable = 0 // Mutex initialization mutex = create_mutex() // Process or thread 1 lock(mutex) // Critical section shared_variable = shared_variable + 1 unlock(mutex) // Process or thread 2 lock(mutex) // Critical section shared_variable = shared_variable * 2 unlock(mutex)

Hold and Wait

Hold and Wait specifically refers to the situation where a process holds resources (locks) and at the same time is waiting for additional resources. If every process in a system holds at least one resource and is waiting for another resource acquired by some other process, it can lead to a deadlock.

For example, consider two processes, A and B. If A holds Resource 1 and requests Resource 2 while B holds Resource 2 and requests Resource 1, a situation of “Hold and Wait” is created. If this pattern extends to multiple processes forming a circular chain, and each process is waiting for a resource held by the next process in the chain, a deadlock can occur.

Preventing or addressing the “Hold and Wait” condition is one of the strategies to avoid deadlock in operating system. One approach is to require a process to release all its currently held resources before requesting new ones. This way, the system can ensure that a process never holds a resource while waiting for another. However, this approach may not always be practical or efficient. Various deadlock avoidance and detection algorithms exist to handle these situations in a more sophisticated manner.

No Preemption

No Preemption is one of the necessary conditions for a deadlock to occur in an operating system. It refers to the principle that resources cannot be forcibly taken away from a process. Once a process holds a resource, it cannot be preempted or forcibly deprived of that resource until it voluntarily releases it.

In the context of deadlock, the “No Preemption” condition contributes to the overall deadlock scenario. When a process is holding a resource and needs another resource currently held by a different process, it may enter a state of deadlock if it cannot obtain the second resource. If preemption were allowed, the operating system could forcibly take the resource from the first process, potentially resolving the deadlock.

However, in systems adhering to the “No Preemption” principle, such intervention is not allowed. Processes must release resources voluntarily, and if a process cannot proceed because it is waiting for a resource held by another process, and that process is not releasing the resource, a deadlock may occur.

This condition is part of the broader set of conditions, often summarized as the “Deadlock Detection” model, which includes Mutual Exclusion, Hold and Wait, No Preemption, and Circular Wait. All these conditions must be satisfied for a deadlock to take place in an operating system. Operating system use various strategies, such as deadlock detection algorithms and prevention mechanisms, to manage and avoid deadlock effectively.

Example:

Imagine two processes, P1 and P2, along with two resources, R1 and R2. If P1 holds R1 and needs R2, and P2 holds R2 and needs R1, a deadlock situation can arise if neither process releases its current resource.

- Initial State:

- P1 holds R1.

- P2 holds R2.

- Requests:

- P1 requests R2.

- P2 requests R1.

- Deadlock Scenario:

- P1 cannot forcibly take R2 from P2, and vice versa, due to the “No Preemption” condition.

- Both processes are now waiting for the other to release the resource they need, leading to a deadlock.

Here’s a simplified representation of the scenario:

Initial State: P1 holds R1 P2 holds R2 Requests: P1 requests R2 P2 requests R1 Deadlock: P1 is waiting for R2, held by P2 P2 is waiting for R1, held by P1

In this example, the absence of preemption means that the system cannot break the impasse by forcefully taking resources from processes. As a result, both processes are stuck waiting for resources held by the other, leading to a deadlock.

Circular Wait

Circular wait is one of the necessary conditions for a deadlock to occur in an operating system. It happens when a set of processes are each waiting for a resource held by the next process in the set, forming a circular chain. In other words, Process A is waiting for a resource held by Process B, Process B is waiting for a resource held by Process C, and so on, until Process N is in a state of anticipation, waiting for a resource currently held by Process A.

Example:

Let’s consider a simple example with three processes (P1, P2, and P3) and three resources (R1, R2, and R3). The circular wait scenario can be represented as follows:

- Process P1 is waiting for a resource held by P2:

- P1 → R2

- Process P2 is waiting for a resource held by P3:

- P2 → R3

- Process P3 is waiting for a resource held by P1:

- P3 → R1

Now, if you connect the dots, you see a circular chain: P1 → P2 → P3 → P1. Each process is waiting for a resource held by the next process in the set, creating a circular dependency.

This situation leads to a deadlock when each process in the cycle is holding the resource it needs to proceed but is unable to do so because it is waiting for the next process to release the resource it holds.

In real-world scenarios, this could occur in various resource allocation situations, such as processes waiting for access to shared databases, network resources, or any other resource that must be acquired exclusively. To prevent deadlock, operating system use various techniques like resource allocation graphs and deadlock prevention algorithms.

Examples of Deadlock

- Resource Allocation Graph:

- Consider a scenario with three processes (P1, P2, P3) and three resources (R1, R2, R3).

- Here, a circular wait exists (P1 → R2 → P2 → R3 → P3 → R1 → P1), meeting the conditions for a deadlock.

- Banker’s Algorithm:

- In a banking system, customers request loans (resources). The bank must ensure that granting loans will not lead to a deadlock.

- If a customer cannot obtain all required resources, the system denies the request to prevent a potential deadlock.

JavaScript Simulation

In a simplified JavaScript simulation, we model processes and resources. Processes request and release resources, and resources are allocated based on availability. While this simulation is abstract and doesn’t mirror a real operating system environment, it provides a hands-on illustration of resource allocation dynamics.

class Resource {

constructor(name) {

this.name = name;

this.isAllocated = false;

}

allocate() {

if (!this.isAllocated) {

this.isAllocated = true;

console.log(`Resource ${this.name} allocated`);

return true;

} else {

console.log(`Resource ${this.name} is already allocated`);

return false;

}

}

release() {

if (this.isAllocated) {

this.isAllocated = false;

console.log(`Resource ${this.name} released`);

} else {

console.log(`Resource ${this.name} is not allocated`);

}

}

}

class Process {

constructor(name, resources) {

this.name = name;

this.resources = resources;

}

requestResource(resource) {

console.log(`${this.name} is requesting ${resource.name}`);

if (resource.allocate()) {

this.resources.push(resource);

console.log(`${this.name} received ${resource.name}`);

} else {

console.log(`${this.name} is waiting for ${resource.name}`);

}

}

releaseResources() {

console.log(`${this.name} is releasing resources`);

this.resources.forEach((resource) => {

resource.release();

});

this.resources = [];

}

}

// Example usage

const resource1 = new Resource('R1');

const resource2 = new Resource('R2');

const resource3 = new Resource('R3');

const process1 = new Process('P1', []);

const process2 = new Process('P2', []);

// Process 1 requests resources

process1.requestResource(resource1);

process1.requestResource(resource2);

// Process 2 requests resources

process2.requestResource(resource2); // Process 2 waits for resource2

// Process 1 releases resources

process1.releaseResources(); // Resources R1 and R2 are released

// Process 2 can now acquire resource2

process2.requestResource(resource2); // Process 2 receives resource2

In this example, Resource represents a shared resource, and Process represents a process requesting and releasing resources.

The console output demonstrates the allocation and release of resources and the waiting state when a resource is not immediately available.

Keep in mind that this is a simplistic representation and doesn’t fully capture the complexity of a real operating system environment.

Preventing Deadlock

Deadlock Avoidance

Deadlock Avoidance is a method used in operating system to prevent the occurrence of deadlock by dynamically analyzing the system’s state and resource allocation requests. Unlike deadlock detection and recovery, which come into play after a deadlock has occurred, avoidance aims to ensure that the system stays in a safe state, making it impossible for a deadlock to happen.

A frequently employed algorithm to prevent deadlock is the Banker’s Algorithm. Let’s delve into the key concepts behind deadlock avoidance using this algorithm and illustrate with an example.

Banker’s Algorithm

The Banker’s Algorithm is based on the concept of “safe state” and ensures that resource allocations will not lead to a deadlock. It works by checking whether granting a resource request will keep the system in a safe state, i.e., it will still be able to satisfy the resource needs of all processes.

Key Data Structures:

- Available: Vector representing the number of available resources of each type.

- Max: Matrix indicating the maximum demand of each process.

- Allocation: Matrix displaying the current resource assignments for each process.

- Need: Matrix indicating the remaining resources needed by each process.

Algorithm Steps:

- When a process requests resources, the system checks if the requested resources are less than or equal to the available resources and the maximum resources a process can claim (Need matrix).

- If the system grants the resources, it updates the Allocation and Need matrices.

- It then checks if the system remains in a safe state after this resource allocation.

- If the system is still safe, the resource allocation is allowed; otherwise, it is denied.

Example:

Let’s consider a simplified scenario with three processes (P1, P2, P3) and three resource types (A, B, C).

Initial State:

- Available: A(3), B(3), C(2)

- Max:

- P1: A(7), B(5), C(3)

- P2: A(3), B(2), C(2)

- P3: A(9), B(0), C(2)

- Allocation:

- P1: A(0), B(1), C(0)

- P2: A(2), B(0), C(0)

- P3: A(3), B(0), C(2)

Request:

- P2 requests A(2), B(1), C(0)

Check:

- Check if the request is less than or equal to the available resources.

- If granted, check if the system remains in a safe state.

Outcome:

- If the system remains safe after allocating the requested resources, the request is granted.

- If not, the request is denied, and the system stays in its current state.

Resource Allocation

Resource Allocation Graphs (RAG) are powerful visual tools used to analyze and prevent deadlock. These graphs provide a clear representation of the relationships between processes and resources, helping to identify potential deadlock and implement preventive measures. Let’s dive into what Resource Allocation Graphs are, their components, and how they work with an illustrative example.

Resource Allocation Graph Components:

- Processes (Nodes): Represented by circles or nodes, processes in the graph signify entities in the system that request and hold resources.

- Resources (Nodes): Depicted as rectangles or nodes, resources represent the objects that processes contend for and may hold.

- Edges: Arrows between nodes indicate relationships. Two types of edges are used:

- Request Edge (R-edge): Indicates a process requesting a resource.

- Assignment Edge (A-edge): Represents a resource assigned to a process.

Example Resource Allocation Graph:

Consider a simplified scenario with three processes (P1, P2, P3) and three resources (R1, R2, R3). We’ll represent the relationships using a Resource Allocation Graph.

In this example:

- P1 is holding R1 (A1 edge) and requesting R2 (R2 edge).

- P2 is holding R2 (A2 edge) and requesting R3 (R3 edge).

- P3 is holding R3 (A3 edge) and requesting R1 (R1 edge).

Analyzing the Resource Allocation Graph:

To prevent deadlock, we need to examine the graph for the existence of cycles that contain only Request Edges. If such cycles are found, a potential deadlock is identified.

In our example, a cycle is present: P1 is requesting R2, P2 is requesting R3, and P3 is requesting R1. This circular wait scenario suggests a possible deadlock.

Preventing Deadlock using Resource Allocation Graphs

Once a potential deadlock is identified in the graph, prevention strategies can be implemented:

- Breaking the Circular Wait: Intervene to break the circular wait condition. This can involve releasing a resource from one of the processes or preemptively taking a resource from a process to satisfy another’s request.

- Dynamic Resource Allocation: Dynamically allocate resources in a way that ensures safety, following principles like the Banker’s Algorithm. This involves assessing the impact of potential resource allocations before granting requests.

Timeouts and Reclamation

Timeouts and reclamation are strategies employed in operating system to prevent deadlock by introducing a time-based mechanism to detect and recover from potential deadlock situations. Let’s delve into each concept with an example:

Timeouts

Timeouts involve setting a limit on how long a process is allowed to wait for a particular resource. If the resource is not granted within the specified time frame, the system assumes a potential deadlock and takes corrective action. This can involve releasing resources held by a process or interrupting the waiting process to prevent a prolonged deadlock.

Example:

Consider two processes, P1 and P2, competing for resources. Each process is allocated one resource but needs an additional resource held by the other. If a process doesn’t receive the requested resource within a defined timeout period, the system assumes a potential deadlock and takes action.

Time t=0: P1 requests R2 Time t=1: P2 requests R1 (Timeout Period Elapses) Time t=5: System detects deadlock, releases resources held by P1 and P2.

In this example, if P1 and P2 don’t obtain the requested resources within the timeout period, the system intervenes, breaking the potential deadlock by releasing the resources and allowing other processes to proceed.

Reclamation

Reclamation involves forcibly reclaiming resources from a process that is suspected to be causing or contributing to a deadlock. This is a more proactive approach compared to timeouts, as the system actively revokes resources from a process that might be holding up the system due to deadlock.

Example:

Consider a scenario where a process P1 holds resources R1 and R2. Meanwhile, another process P2 is waiting for resource R2 held by P1. If the system suspects a deadlock, it can forcefully reclaim resources from P1 to break the potential deadlock.

Time t=0: P1 holds R1 and R2 Time t=1: P2 requests R2 (Deadlock Suspected) Time t=5: System forcefully reclaims resources R1 and R2 from P1.

By reclaiming resources from P1, the system aims to resolve the potential deadlock and allow other processes, like P2, to make progress.

Conclusion

Deadlock pose a significant challenge in operating system, and understanding their causes is crucial for effective prevention.

By employing strategies like deadlock avoidance, resource allocation graphs, and timely interventions, operating system can minimize the occurrence and impact of deadlock, ensuring the smooth execution of processes.

In parallel, exploring advanced scheduling algorithms in OS becomes pivotal as ongoing research aims to develop even more robust methods to handle and prevent deadlocks in complex computing environments.